Rage Against the Machine Learning

(Or: How Fancier Models Don’t Always Make Better Systems)

I once ordered a graphic tee titled “Rage Against the Machine Learning.” It arrived fast, but with a typo. Fine, I thought. I’ll get a replacement. That one had a different typo. Sneaky AI, striking again.

That’s a perfect metaphor for most AI today: clever in theory, flawed in practice.

The Problem: PEBDAP (Problem Exists Between Demo and Production)

Everyone celebrates cool models: ChatGPT, Claude, Gemini, Grok, fine-tuned models, you name it. But many forget that building an LLM-powered app is the easy part. Delivering a reliable system that actually drives the business is where the real work begins. As Microsoft’s Mo Steller puts it: “Modeling is the smallest slice… the other 90% is operationalization.”

Not convinced? Gartner says only 54% of AI projects ever make it to production, even though 80% of execs predict business value from AI automation. And of the projects that do make it to production? Over 80% fail!
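Taken at face value, and assuming the 80% failure rate applies to the projects that do reach production, the two figures compound into something even bleaker:

$$
P(\text{reaches production and succeeds}) < 0.54 \times (1 - 0.80) = 0.54 \times 0.20 = 0.108
$$

In other words, by these numbers fewer than about one AI project in nine both ships and survives.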

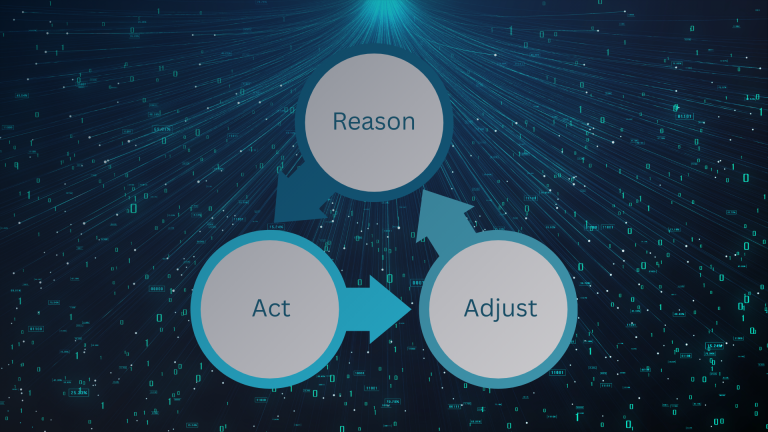

Build It Like It’s Going to Production

The biggest mistake I see? People build AI demos like science fair projects: flashy, one-off, and totally unprepared for the real world. But if your prototype actually works, someone’s going to ask, “Can we ship this?” And suddenly, your hacked-together prompt becomes a liability. That’s why you should treat every AI app like it’s headed to production from day one.

That means thinking about versioning, monitoring, data pipelines, user access, latency, cost, and edge cases before you go live. Otherwise, you’re not building a system; you’re just gambling with a clever API call.
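To make that list less abstract, here is a minimal Python sketch of what “production-minded” can look like for even a single model call. It assumes a hypothetical `call_model` function standing in for whatever LLM client you actually use; the prompt version string, input limit, and per-character cost rate are made-up placeholders, not any vendor’s real API or pricing. It covers versioning (a pinned prompt version logged with every call), monitoring (log lines plus a logged failure), latency, a rough cost estimate, and edge cases (empty or oversized input, and a fallback when the model call fails). Data pipelines and user access are out of scope here.

```python
import logging
import time
from dataclasses import dataclass
from typing import Callable

logger = logging.getLogger("llm_app")

# Hypothetical values: in a real system, pin these in config and version them like code.
PROMPT_VERSION = "summarize-v3"
PROMPT_TEMPLATE = "Summarize the following text in one sentence:\n\n{text}"
MAX_INPUT_CHARS = 8_000
COST_PER_1K_CHARS = 0.002  # hypothetical rate, not any vendor's real price


@dataclass
class LLMResult:
    text: str
    prompt_version: str
    latency_s: float
    est_cost: float
    fallback_used: bool


def run_prompt(user_text: str, call_model: Callable[[str], str]) -> LLMResult:
    """Wrap a model call with validation, versioning, timing, cost tracking and a fallback."""
    # Edge cases: reject empty input and truncate oversized input before paying for it.
    cleaned = user_text.strip()
    if not cleaned:
        raise ValueError("empty input")
    cleaned = cleaned[:MAX_INPUT_CHARS]

    prompt = PROMPT_TEMPLATE.format(text=cleaned)
    start = time.perf_counter()
    try:
        output = call_model(prompt)
        fallback = False
    except Exception:
        # Monitoring: record the failure with the prompt version, then degrade gracefully.
        logger.exception("model call failed (prompt_version=%s)", PROMPT_VERSION)
        output = "Sorry, the summary is unavailable right now."
        fallback = True
    latency = time.perf_counter() - start

    # Cost: a rough character-based estimate; real billing is usually per token.
    est_cost = (len(prompt) + len(output)) / 1000 * COST_PER_1K_CHARS
    logger.info(
        "prompt_version=%s latency_s=%.3f est_cost=%.5f fallback=%s",
        PROMPT_VERSION, latency, est_cost, fallback,
    )
    return LLMResult(output, PROMPT_VERSION, latency, est_cost, fallback)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)

    def fake_model(prompt: str) -> str:
        return "A short summary."

    def broken_model(prompt: str) -> str:
        raise TimeoutError("upstream timeout")

    ok = run_prompt("Some long document...", fake_model)
    bad = run_prompt("Some long document...", broken_model)
    print(ok.fallback_used)   # → False
    print(bad.fallback_used)  # → True
```

Passing the model call in as a function keeps the wrapper vendor-neutral and makes it easy to test with a stub, which is exactly the kind of habit a one-off demo tends to skip.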

Because if you don’t build it right from the start, the AI will rage against you!