Security-First Coding: Engineering for Vibe Coders

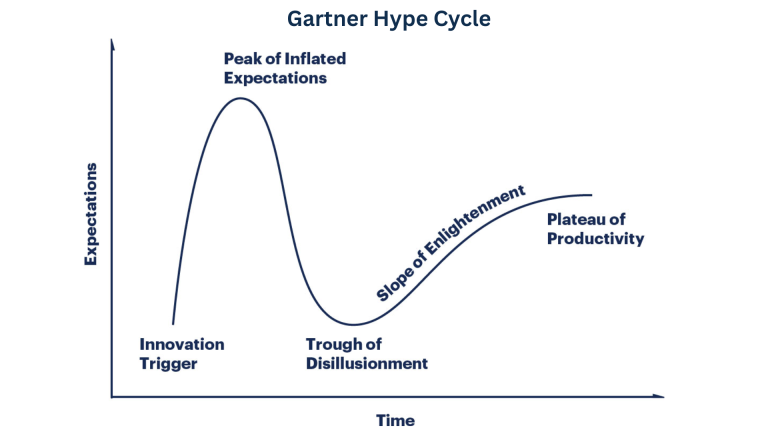

If you’ve ever used an AI coding assistant and felt the rush of seeing your prototype actually run, you’re not alone. The rise of “vibe coding” has made it possible for anyone to spin up a functioning app or API in an afternoon. But beneath that glow of instant gratification lurks something every experienced developer eventually learns: what works isn’t always safe.

Security isn’t something you bolt on later. It’s a mindset that should shape how you plan, prompt, and design your prototype before you write the first line of code. In this first article of the Engineering for Vibe Coders series, we’ll unpack what “security-first” really means and walk through the handful of areas that can make or break your project’s future.

1. Think about who and what your app touches

Before you open your AI tool, take ten minutes to map the basic data flow:

- What information are you collecting?

- Where does it come from?

- Where is it going?

- Who has access to it?

Even if you’re “just testing,” many prototypes end up with real data: email addresses, customer notes, maybe even payment info. Every one of those details represents a potential risk surface.

🟢 Pre-prototype habit:

Draw your system. Circle anything that looks like personal or sensitive information. If you can’t anonymize or fake it during prototyping, you’re not building safely.
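If you need realistic-looking records to test with, a few lines of code can generate obviously fake ones. Here’s a minimal Python sketch. The field names, sample names, and notes are hypothetical placeholders. It builds reproducible customer records on the reserved example.com domain, so no generated address can belong to a real person:

```python
import random
import uuid
from dataclasses import dataclass

# example.com is reserved for documentation (RFC 2606), so these
# addresses can never belong to a real person.
FAKE_DOMAIN = "example.com"
FIRST_NAMES = ["Ada", "Grace", "Alan", "Linus", "Barbara"]
NOTES = ["Asked about pricing", "Requested a demo", "Reported a login issue"]


@dataclass
class FakeCustomer:
    customer_id: str
    name: str
    email: str
    note: str


def make_fake_customers(count: int, seed: int = 42) -> list[FakeCustomer]:
    """Build obviously fake customer records; the same seed gives the same data."""
    rng = random.Random(seed)
    customers = []
    for i in range(count):
        name = rng.choice(FIRST_NAMES)
        customers.append(
            FakeCustomer(
                # UUID built from the seeded generator, so it is reproducible.
                customer_id=str(uuid.UUID(int=rng.getrandbits(128), version=4)),
                name=name,
                # The index keeps every address unique even when names repeat.
                email=f"{name.lower()}.{i}@{FAKE_DOMAIN}",
                note=rng.choice(NOTES),
            )
        )
    return customers


if __name__ == "__main__":
    for customer in make_fake_customers(3):
        print(customer)
```

Seeding the generator means every teammate (and every test run) sees the same fake data, which makes bugs easier to reproduce without ever touching real customers.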

2. Never hard-code secrets (even “just for now”)

AI-generated snippets love to show examples with API keys or tokens inline. Vibe coders often copy them without thinking. Those credentials end up in version control, shared screenshots, or even public repos, where automated bots harvest them within minutes.

🟢 Pre-prototype habit:

Set up a basic secrets manager (even a .env file excluded from Git) before you start. Tell your AI assistant where to store credentials securely instead of letting it guess. (And while we’re talking about Git, make sure you understand .gitignore.)
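Here’s a minimal Python sketch of that habit. It assumes credentials are exposed as environment variables; a library such as python-dotenv can load a local .env file into the environment at startup. The helper `get_secret` and the variable name `PAYMENTS_API_KEY` are hypothetical:

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required credential is not set in the environment."""


def get_secret(name: str) -> str:
    """Return a credential from the environment, or fail loudly.

    There is deliberately no hard-coded fallback value.
    """
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(
            f"{name} is not set. Add it to your local .env file "
            "(which .gitignore should exclude) or your shell environment."
        )
    return value


if __name__ == "__main__":
    try:
        # Hypothetical variable name; use whatever your service expects.
        api_key = get_secret("PAYMENTS_API_KEY")
        print("PAYMENTS_API_KEY loaded (value intentionally not printed)")
    except MissingSecretError as exc:
        print(exc)
```

Failing fast is the point: a missing key should stop the app at startup, not quietly fall back to a key someone pasted into the code “just for now.”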

3. Validate every input, especially in code AI helped write

When you’re vibe coding, you tend to trust what “looks right.” But AI models can produce code that accepts unsanitized input or assumes trusted users. That’s the seed of injection attacks, malformed data, and subtle logic bugs.

🟢 Pre-prototype habit:

Decide early what “good” input looks like: length, format, type. Add checks and error handling in your scaffolding before you wire in the UI or API.
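Here’s a sketch of what “decide early what good input looks like” can mean in practice. The form fields, the length limit, and the age range are hypothetical placeholders. Untrusted input gets checked for type, format, and length, then turned into a typed object before anything else touches it:

```python
import re
from dataclasses import dataclass

# Deliberately simple: one "@", no whitespace, a dot in the domain part.
# It rejects obvious garbage; it is not a complete RFC 5322 email validator.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
MAX_NOTE_LENGTH = 500  # hypothetical limit


class ValidationError(ValueError):
    """Raised when untrusted input does not match the expected shape."""


@dataclass(frozen=True)
class ContactForm:
    email: str
    note: str
    age: int


def validate_contact(raw: dict) -> ContactForm:
    """Check type, format, and length, then return a typed object."""
    email = raw.get("email")
    if not isinstance(email, str) or not EMAIL_PATTERN.fullmatch(email.strip()):
        raise ValidationError("email: expected an address like name@example.com")

    note = raw.get("note", "")
    if not isinstance(note, str) or len(note) > MAX_NOTE_LENGTH:
        raise ValidationError(f"note: expected text of at most {MAX_NOTE_LENGTH} characters")

    age = raw.get("age")
    # bool is a subclass of int in Python, so True would otherwise pass as 1.
    if isinstance(age, bool) or not isinstance(age, int) or not 13 <= age <= 120:
        raise ValidationError("age: expected a whole number from 13 to 120")

    return ContactForm(email=email.strip(), note=note, age=age)


if __name__ == "__main__":
    print(validate_contact({"email": "ada@example.com", "note": "Hi!", "age": 36}))
```

Checks like these complement, rather than replace, other defenses such as parameterized database queries.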

4. Plan for least privilege, not maximum convenience

When you connect to a database or cloud resource, your instinct is to “make sure it works,” so you use admin credentials. Congratulations: you’ve just given your prototype the power to destroy production data if it is ever pointed at production.

🟢 Pre-prototype habit:

Create a separate test database, bucket, or account with limited permissions. Assume your prototype will break, and design the damage radius to be small.
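Database roles and cloud permissions vary by provider. As a small local stand-in, here’s a Python sketch using SQLite’s read-only URI mode (`mode=ro`): the prototype’s reader connection cannot write, so a buggy `DELETE` fails instead of wiping data. With a server database, the equivalent would be a dedicated user granted only the permissions the prototype needs. The table, file, and function names are hypothetical:

```python
import sqlite3
import tempfile
from contextlib import closing
from pathlib import Path


def open_read_only(db_path: Path) -> sqlite3.Connection:
    """Open an existing SQLite file so that any write attempt fails."""
    # mode=ro is a SQLite URI parameter; uri=True tells Python to parse it.
    return sqlite3.connect(f"{db_path.resolve().as_uri()}?mode=ro", uri=True)


def create_test_db(db_path: Path) -> None:
    """Create a throwaway test database seeded with synthetic data."""
    with closing(sqlite3.connect(db_path)) as conn:
        conn.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT)")
        conn.execute("INSERT INTO notes (body) VALUES (?)", ("synthetic note",))
        conn.commit()


def try_delete_everything(conn: sqlite3.Connection) -> str:
    """Simulate a buggy prototype that runs a destructive statement."""
    try:
        conn.execute("DELETE FROM notes")
        conn.commit()
        return "deleted"
    except sqlite3.OperationalError as exc:
        return f"blocked: {exc}"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "prototype_test.db"
        create_test_db(path)
        with closing(open_read_only(path)) as reader:
            print(reader.execute("SELECT body FROM notes").fetchall())
            print(try_delete_everything(reader))
```

The design choice is that the limit lives in the connection itself, not in the application code, so a mistake in generated code can’t widen the damage radius.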

5. Understand how your AI tool might leak data

Many AI assistants store prompts, responses, and context, and some providers may use that data to train future models. If you paste real customer or internal data into those prompts, you’ve created an unintentional data-sharing pipeline.

🟢 Pre-prototype habit:

Check your tool’s privacy policy before using it with real data. Use placeholders or synthetic data during development.
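If you must discuss real text with an AI tool, scrubbing it locally first keeps the sensitive parts on your machine. This Python sketch swaps email addresses and card-like numbers for placeholders such as `<EMAIL_1>` and keeps a local mapping so you can restore them afterward. The regular expressions are deliberately simple assumptions that will miss names, addresses, and free-form details. Treat this as a safety net, not a substitute for synthetic data:

```python
import re

# Illustrative patterns only. They catch common identifiers but will miss
# names, street addresses, and anything sensitive written as free-form prose.
SENSITIVE_PATTERNS = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    # 13 to 16 digits, optionally separated by single spaces or dashes.
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
]


def scrub_for_prompt(text: str) -> tuple[str, dict[str, str]]:
    """Replace sensitive values with placeholders such as <EMAIL_1>.

    Returns the scrubbed text plus a placeholder -> original mapping that
    stays on your machine, so the real values never reach the AI tool.
    """
    mapping: dict[str, str] = {}
    for label, pattern in SENSITIVE_PATTERNS:
        seen: dict[str, str] = {}  # original value -> placeholder, per label

        # Called by re.sub within this loop iteration, so label/seen are current.
        def to_placeholder(match: re.Match) -> str:
            value = match.group(0)
            if value not in seen:  # repeated values share one placeholder
                seen[value] = f"<{label}_{len(seen) + 1}>"
                mapping[seen[value]] = value
            return seen[value]

        text = pattern.sub(to_placeholder, text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into the AI tool's response, locally."""
    for placeholder, value in mapping.items():
        text = text.replace(placeholder, value)
    return text


if __name__ == "__main__":
    prompt = "Why did ada@example.com get charged twice on 4111 1111 1111 1111?"
    safe_prompt, mapping = scrub_for_prompt(prompt)
    print(safe_prompt)
```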

6. Design for safe defaults

Security isn’t just about blocking threats; it’s about starting safe. When defaults are open or permissive, users and developers rarely tighten them later.

Examples:

- Default to “deny” on unknown requests.

- Require authentication even in dev mode.

- Log failed access attempts.

- Mask sensitive data in logs by default.

🟢 Pre-prototype habit:

When your AI assistant generates configuration for a framework such as Flask, FastAPI, or Express, read the settings and their comments. Set secure defaults now, before the code spreads across multiple environments.
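The defaults listed above can be sketched in a framework-agnostic way. The route table, role names, and helpers below are hypothetical; Flask, FastAPI, and Express each have their own mechanisms for this. The sketch shows unknown routes denied by default, no development-mode bypass, and failed attempts logged with the user identifier masked:

```python
import logging
from typing import Optional

logger = logging.getLogger("prototype.access")

# Hypothetical route table. None marks a route that is intentionally public;
# any path missing from this table is denied (the safe default).
ROUTE_ROLES: dict[str, Optional[str]] = {
    "/health": None,
    "/notes": "user",
    "/admin": "admin",
}


def mask(identifier: Optional[str]) -> str:
    """Log only a short prefix so logs don't accumulate full emails or IDs."""
    if not identifier:
        return "<anonymous>"
    return identifier[:2] + "***"


def is_allowed(path: str, user: Optional[str], roles: frozenset = frozenset()) -> bool:
    """Allow only routes and roles that are listed explicitly; deny everything else."""
    # %r escapes newlines in the attacker-controlled path, limiting log forging.
    if path not in ROUTE_ROLES:
        logger.warning("DENY unknown route path=%r user=%s", path, mask(user))
        return False

    required_role = ROUTE_ROLES[path]
    if required_role is None:
        return True

    # No "skip auth in dev mode" branch: the same rule applies in every environment.
    if user is None or required_role not in roles:
        logger.warning(
            "DENY path=%r user=%s required_role=%s", path, mask(user), required_role
        )
        return False
    return True


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    print(is_allowed("/notes", "ada@example.com", frozenset({"user"})))
    print(is_allowed("/debug", "ada@example.com", frozenset({"admin"})))
```

Adding a route is a deliberate act here: until someone lists it, it stays closed.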

7. Start with a mental checklist

Even for quick prototypes, take a moment to run through a simple pre-build checklist:

| Checklist Item | Why It Matters |
|---|---|
| Have I defined what data is sensitive? | Prevent accidental exposure later |
| Are my secrets stored outside code? | Stop credential leaks |
| Am I validating input before use? | Reduce injection and logic flaws |
| Am I using least privilege for services? | Limit blast radius |
| Have I read the AI tool’s data policy? | Prevent data leakage |
| Are my logs safe to share? | Avoid hidden PII leaks |

If you can check those boxes before writing code, your prototype will be more resilient than many production systems.
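A couple of these checks can also be automated as a rough pre-flight script. This Python sketch flags a missing `.env` entry in `.gitignore` and lines that look like hard-coded credentials. The regular expression is a crude heuristic (an assumption, not a standard), and the `.gitignore` check ignores most of Git’s pattern rules; dedicated secret-scanning tools do this far more thoroughly:

```python
import re
from pathlib import Path

# Crude heuristic (an assumption, not a standard): a credential-sounding name
# assigned a quoted literal of 8+ characters. Expect false positives and misses.
SUSPICIOUS_ASSIGNMENT = re.compile(
    r"""(?i)\b\w*(api_key|secret|token|password)\w*\s*[:=]\s*["'][^"']{8,}["']"""
)
SCANNED_SUFFIXES = {".py", ".js", ".ts"}
SKIPPED_DIRS = {".git", ".venv", "node_modules"}


def env_file_is_ignored(repo: Path) -> bool:
    """Simplified check: does .gitignore list .env on a line of its own?"""
    gitignore = repo / ".gitignore"
    if not gitignore.is_file():
        return False
    entries = {line.strip() for line in gitignore.read_text(encoding="utf-8").splitlines()}
    return bool(entries & {".env", "/.env", ".env*"})


def find_hardcoded_secrets(repo: Path) -> list[str]:
    """Return 'file:line' locations that look like inline credentials."""
    findings = []
    for path in sorted(repo.rglob("*")):
        relative = path.relative_to(repo)
        if not path.is_file() or path.suffix not in SCANNED_SUFFIXES:
            continue
        if SKIPPED_DIRS.intersection(relative.parts):
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        for number, line in enumerate(text.splitlines(), start=1):
            if SUSPICIOUS_ASSIGNMENT.search(line):
                findings.append(f"{relative.as_posix()}:{number}")
    return findings


def preflight(repo: Path) -> list[str]:
    """Collect problems; an empty list means these two checks passed."""
    problems = []
    if not env_file_is_ignored(repo):
        problems.append(".env is not listed in .gitignore")
    problems += [f"possible hard-coded secret at {hit}" for hit in find_hardcoded_secrets(repo)]
    return problems


if __name__ == "__main__":
    for problem in preflight(Path.cwd()):
        print(problem)
```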

8. The mindset shift: from “make it work” to “make it safe enough to test”

Security-first coding isn’t about paranoia; it’s about respecting what you’re building.

Even in a prototype, design choices solidify quickly, especially when you later hand the project to someone else or connect it to real users.

Here’s the rule of thumb:

The habits you form in your prototype become the architecture of your product.

If you embed good security instincts early, even lightweight ones, you’re far less likely to need a painful rewrite just to “lock it down” later.

Closing note

Vibe coding is powerful. It’s a creative shortcut that’s opening the door to a new generation of makers. But speed doesn’t have to mean recklessness.

If you can pause for just a few minutes before you start building, sketch the risks, and apply a few basic safeguards, you’ll build prototypes that are faster and smarter… the kind that can actually grow into real products.

See the full list of free resources for vibe coders!

Still have questions or want to talk about your projects or your plans? Set up a free 30-minute consultation with me!