After Vibe Coding: What Comes Next for AI Builders?

Vibe coding had a moment. Andrej Karpathy coined the term in February 2025, Collins Dictionary named it Word of the Year that November, and somewhere in between, millions of people discovered they could describe an app to an AI and watch it appear.

That’s genuinely remarkable. A year ago, building software meant years of training or paying expensive developers. Now you can have a working prototype before lunch. The barriers fell fast.

But here’s what I’ve been seeing in my consulting work: a lot of those prototypes aren’t making it to production. Or they make it, and then something breaks, and the person who built it has no idea why. Or they work fine until there are real users, and then everything falls apart.

This isn’t a failure of vibe coding. It’s just what happens when you reach the edges of what any tool can do on its own.

So what comes next?

The Vibe Coding Ceiling

Let me be clear about what vibe coding does well. It’s fantastic for:

- Rapid prototyping
- Personal tools you’ll use yourself
- Exploring whether an idea is worth pursuing
- Learning by building

If you want to test a concept quickly, vibe coding is a superpower. You can validate ideas in hours that would have taken weeks or months before.

The ceiling appears when you need the thing to actually work reliably. When other people depend on it. When it handles real data, real money, real consequences.

That’s when you start hearing stories like:

“It worked perfectly in testing, but when we launched it crashed constantly.”

“I tried to add a simple feature and somehow broke everything else.”

“We got hacked and I don’t even know how.”

“The AI bill this month was $4,000 and I have no idea why.”

These aren’t edge cases. They’re the normal outcome when software gets built without certain decisions being made consciously.
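The surprise AI bill is a good example, because it usually comes down to arithmetic nobody did up front. Here is a rough sketch in Python. Every number in it is made up for illustration (the request volumes, the token count, and the price per million tokens are assumptions, not any provider's real pricing), and `estimate_monthly_cost` is a hypothetical helper, not part of any library.

```python
def estimate_monthly_cost(requests_per_day: int,
                          tokens_per_request: int,
                          price_per_million_tokens: float,
                          days: int = 30) -> float:
    """Rough monthly spend in dollars for a usage-priced AI API."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * price_per_million_tokens


# Made-up numbers: a prototype tested by hand vs. the same app with real users.
testing = estimate_monthly_cost(50, 2_000, 3.0)
launched = estimate_monthly_cost(20_000, 2_000, 3.0)
print(f"testing: ${testing:,.2f}  launched: ${launched:,.2f}")
```

With these assumed inputs, the same app goes from roughly $9 a month in testing to $3,600 a month after launch. Nothing about the code changed, only the usage.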

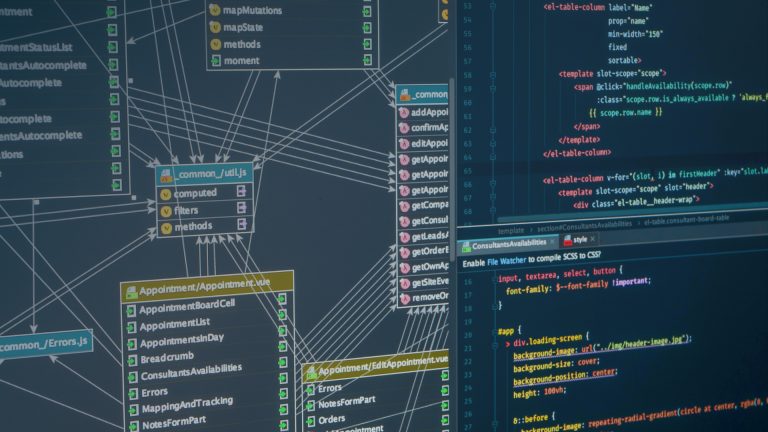

The Silent Decisions Problem

Here’s what most people don’t realize about vibe coding: when you prompt an AI to build something, you’re not just getting code. You’re getting dozens of decisions baked into that code.

Where should data be stored? The AI picked something.

How should users log in? The AI made a choice.

What happens when the database is unavailable? The AI decided (or didn’t).

How do different parts of the system communicate? The AI chose a pattern.

These aren’t minor implementation details. They’re architectural decisions that shape everything about how your software behaves, scales, breaks, and recovers.
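To make one of these concrete, take the database question. Below is a minimal Python sketch of what an explicit decision might look like: retry a few times, then fall back to a known value instead of crashing. The names `DatabaseUnavailable` and `fetch_with_retry` are hypothetical, and the retry count and fallback are assumptions you would choose for your own situation. It is one option among several, not the right answer.

```python
import time


class DatabaseUnavailable(Exception):
    """Raised by a hypothetical data layer when the database cannot be reached."""


def fetch_with_retry(fetch, retries=3, delay_seconds=0.0, fallback=None):
    """Call fetch(); retry on DatabaseUnavailable, then return the fallback.

    The wait grows with each attempt so a struggling database gets some room.
    """
    for attempt in range(1, retries + 1):
        try:
            return fetch()
        except DatabaseUnavailable:
            if attempt < retries:
                time.sleep(delay_seconds * attempt)
    # Every attempt failed: degrade gracefully instead of raising.
    return fallback
```

The point is less the code than the fact that someone chose it. An AI might just as reasonably have generated a version with no retry at all, and you would only find out during the first outage.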

When a professional software engineer builds something, they make these decisions explicitly. They think about tradeoffs. They choose based on the specific needs of the project.

When you vibe code, the AI makes these decisions implicitly, based on patterns in its training data. It picks something reasonable. But “reasonable in general” isn’t the same as “right for your situation.”

You don’t notice this at first. The app works. Then later, sometimes much later, you discover that some invisible decision is causing problems. And because you didn’t know the decision was made, you don’t know how to unmake it.

The Spectrum

I’ve started thinking about this as a spectrum, not a binary.

On one end, you have pure vibe coding: describe what you want, accept what the AI builds, don’t look too closely at how it works. This is fine for throwaway projects and personal experiments.

On the other end, you have traditional software engineering: understand every decision, write or review every line, full control and full responsibility. This is what professional development teams do for production systems.

Most people building with AI are somewhere in the middle, and that’s where it gets interesting.

I’ve been calling the people in this middle ground “AI Builders.” Not because I think we need another term (we probably don’t), but because it’s useful to have language for the thing that’s emerging.

An AI Builder is someone who uses AI to create software but takes responsibility for the decisions underneath. They don’t need to write the code themselves, but they need to understand what’s being built and why. They work with AI as a collaborator, not just a vending machine.

The shift from vibe coder to AI Builder isn’t about learning to code. It’s about learning to decide.

What Changes

So what’s actually different when you move from vibe coding to intentional AI building?

You ask for options instead of solutions.

The vibe coding approach: “Build me a user authentication system.”

The AI Builder approach: “What are my options for user authentication? What are the tradeoffs between them? What would you recommend for an app with these characteristics?”

Same goal, different conversation. In the first case, you get an answer. In the second case, you get choices. And choices mean you’re the one deciding.

You think about the lifecycle, not just the build.

Vibe coding focuses on creation. You prompt, you build, you ship. Done.

But shipping is maybe 30% of the work. What about deploying it somewhere reliable? Knowing when it breaks? Keeping it running? Updating it when dependencies change? Adding features without breaking existing ones?

AI Builders think about the whole lifecycle from the start. Not because they’re paranoid, but because decisions made during building affect everything that comes after.
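“Knowing when it breaks” can start very small. As an illustration, here is a Python sketch of a basic health check: a set of named checks that each answer yes or no, rolled up into a single report. The function names and the example checks are hypothetical. A real app would plug in its own checks and send the result somewhere a human will see it.

```python
from typing import Callable


def run_health_checks(checks: dict[str, Callable[[], bool]]) -> dict[str, str]:
    """Run each named check and report "ok" or "failing" for it.

    A check that raises is treated as failing rather than crashing the report.
    """
    report = {}
    for name, check in checks.items():
        try:
            report[name] = "ok" if check() else "failing"
        except Exception:
            report[name] = "failing"
    return report


def is_healthy(report: dict[str, str]) -> bool:
    """The app counts as healthy only when every check passed."""
    return all(status == "ok" for status in report.values())
```

Deciding which checks belong in that dictionary is exactly the kind of lifecycle question that is easier to answer while you are building than after something has gone wrong.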

You know what you don’t know.

This might be the most important shift. Vibe coders often don’t realize how much is happening under the hood. AI Builders have enough awareness to know where the gaps are.

You don’t need to understand everything. But you need to understand enough to ask good questions, to know when you’re in over your head, and to recognize when something feels wrong even if you can’t articulate why.

You verify before you trust.

AI makes mistakes. Confident, plausible-sounding mistakes. The code looks right. The explanation makes sense. And it’s still wrong.

Vibe coding tends toward accepting outputs at face value. AI Building includes verification as a default. Not because you distrust AI, but because you understand how it works well enough to know that trust needs to be earned, not assumed.
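Here is a small, invented Python example of what that verification can look like. The first function is the kind of code that looks right at a glance: it splits a bill evenly. The check underneath states one property that must always hold (the shares add up to the total) and catches the problem. All three functions are hypothetical and written for this illustration.

```python
def split_bill_naive(total_cents: int, people: int) -> list[int]:
    """Plausible-looking version: everyone pays the same rounded-down share."""
    return [total_cents // people] * people


def split_bill_checked(total_cents: int, people: int) -> list[int]:
    """Corrected version: hand the leftover cents to the first few people."""
    share, leftover = divmod(total_cents, people)
    return [share + 1 if i < leftover else share for i in range(people)]


def shares_add_up(split, total_cents: int, people: int) -> bool:
    """The check itself: the shares must sum back to the original total."""
    return sum(split(total_cents, people)) == total_cents


print(shares_add_up(split_bill_naive, 1000, 3))    # False: one cent goes missing
print(shares_add_up(split_bill_checked, 1000, 3))  # True
```

You don’t have to read the implementation to write a check like this. You only have to know what must be true about the result, which is a question about your domain rather than about code.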

The Skills That Transfer

Here’s some good news: the skills that make someone a good AI Builder aren’t new skills. They’re the same skills that have always mattered in building things.

Clear thinking about problems. What are you actually trying to solve? For whom? What does success look like?

Understanding tradeoffs. Every decision has costs and benefits. Nothing is free. What are you optimizing for, and what are you willing to sacrifice?

Asking good questions. The quality of your inputs determines the quality of your outputs. This was true before AI and it’s still true now.

Knowing what you don’t know. The most dangerous state is confident ignorance. The safest state is knowing where your knowledge ends.

Taking responsibility for outcomes. You can delegate the work, but you can’t delegate the accountability. If it breaks, it’s your problem to solve.

These aren’t technical skills. They’re thinking skills. And they’re learnable.

The Path Forward

If you’ve been vibe coding and you’re hitting the ceiling, you don’t need to go back to school for computer science. You don’t need to learn to write code from scratch. That’s the old path, and it’s not the only one anymore.

What you need is to develop judgment about the decisions that matter.

Which architectural choices will come back to bite you? Which ones are safe to let AI handle? When should you push back on AI’s recommendations? When should you trust them? What questions should you ask before you start building? What should you verify after?

This is learnable. It’s a different kind of learning than syntax and algorithms, but it’s not harder. In some ways it’s easier, because it builds on things you already know about your domain, your users, and your goals.

The tools are going to keep getting better. The AI models will improve. The platforms will mature. But the need for human judgment about what to build and why isn’t going away. If anything, it’s becoming more important as the building itself gets easier.

What I’d Tell a Friend

If a friend came to me and said “I’ve been vibe coding and I want to get more serious about it,” here’s what I’d tell them:

Start noticing the decisions. When AI builds something for you, ask yourself: what choices did it make? Where did it have options? You don’t need to understand the code, but you should understand the shape of what was built.

Get comfortable with “what are my options?” Make that your default prompt before “build me X.” Let AI be your analyst before it’s your builder.

Think about what happens after launch. Before you build anything, ask: how will I deploy this? How will I know if it’s working? What happens when something breaks? These questions will change what you build.

Find the boundaries of your knowledge. Where do you feel confident? Where do you feel lost? The lost feeling isn’t a problem to eliminate. It’s information about where to focus.

Don’t go it alone on the important stuff. For anything with real stakes, get a second opinion. That could be a developer friend, a consultant, or even just a different AI model. Fresh eyes catch things you’ll miss.

The goal isn’t to become a software engineer. The goal is to become someone who can build with AI responsibly. That’s a different thing, and it’s a thing that matters more every day as more people pick up these tools.

Vibe coding opened the door. The question now is what you build once you’re inside.